How Big is Big? The Challenge of Handling Over 5 Million Records

When it comes to data migration, especially for large datasets, how big is too big? What if you're dealing with over 5 million records? This post explores the challenges and possible solutions for this predicament.

Data Migration: Excel vs RapidStart

Configuration Packages accept two types of files for data migration:

- Excel: Created by exporting a template. It has a maximum row limitation of 1,048,576.

- RapidStart: Also created by exporting a package, but appears to be a type of archive file.

So, if we need to import or export more than approximately 1 million records, what do we do?

The RapidStart Solution

After some investigation, it turns out that RapidStart files are essentially gzipped XML files. This opens up the possibility of using these files as an alternative to Excel.

Learning LINQ to XML

To explore this further, I dabbled in LINQ to create XML files from CSV. I followed the tutorials on Microsoft Learn, specifically "Working with LINQ - C#" and "Overview - LINQ to XML - .NET".

With some help from ChatGPT for coding, I developed two programs:

- The first program converts a CSV lists of Fixed Assets records into a RapidStart package.

- The second program exports all table data from any RapidStart package into a series of CSV files.

Both programs' source code can be found on GitHub:

A Guide for .NET C# Beginners

If you're new to .NET C# (as I am), here's a very basic guide on how you can compile and use the source code:

- Install .NET and Visual Studio Code.

- Add the PowerShell extension for VS Code.

- Open the terminal in VS Code, create a folder, navigate into the folder, and set up a sample project:

PS > md Hello-World

PS > cd .\Hello-World\

PS > dotnet new console

PS > code .

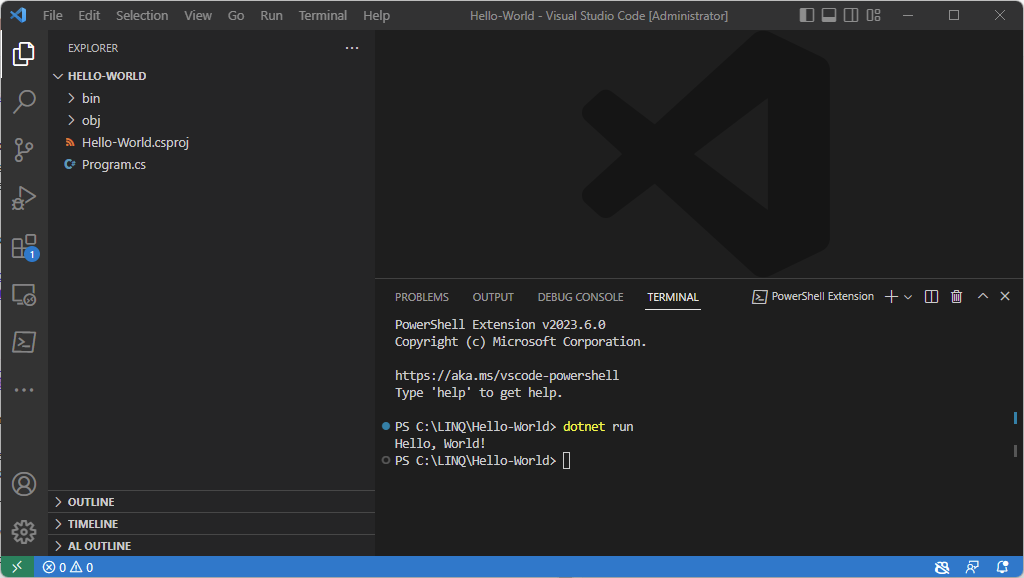

The last command will open VS Code to that project, you can then run the sample app:

PS > dotnet run

|

| Running the sample c# app from the PS terminal in VS Code |

To use the experimental apps I created simply replace the Program.cs source code with the code from GitHub, save the file, and run it again.

Performance Metrics: Fixed Asset Processing Times

Next, let's talk about the performance metrics. I conducted tests using 5k and 25k samples to calculate depreciation and journal posting times:

- For the 5k sample, the total processing time was 390 seconds, giving a per-record time of 78 ms.

- For the 25k sample, the total processing time was 1897 seconds, with a per-record time of 75.9 ms.

Future Considerations

The journal posting time could potentially be reduced by not having a balancing line for each asset but using one balancing line for the entire batch. This optimization is something to explore in the future.

What's Next?

In the future, I'll be looking into using the Job Queue to manage these long-running tasks more efficiently.